Editor's note: Gibson is a virtual environment based on the real world to support perceptual learning, which is different from games or artificial environments. Gibson allows algorithms to simultaneously explore perception and movement.

The Gibson environment is named after James J. Gibson, author of The Ecological Approach to Visual Perception, who wrote: "We must perceive in order to move, but we must also move in order to perceive."

Summary

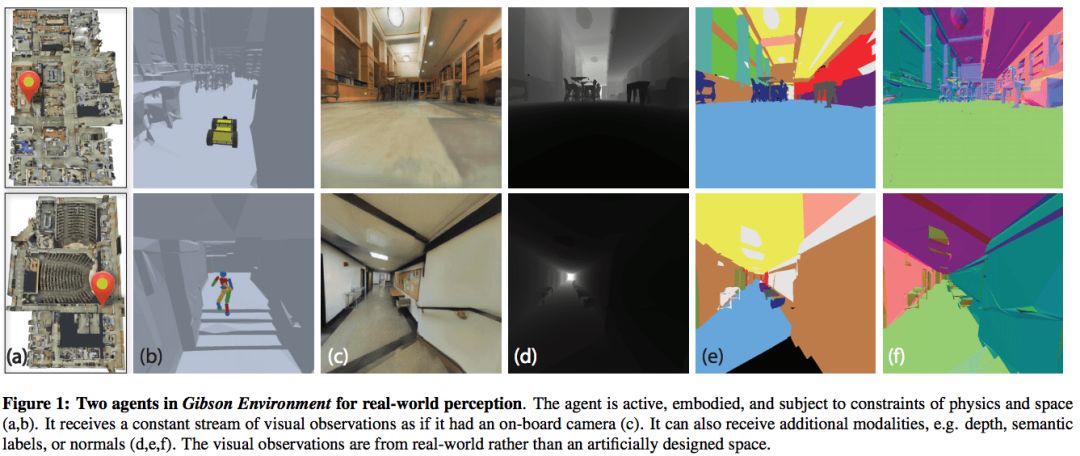

Building visual perception models for active agents and learning sensorimotor control in the physical world is difficult: current algorithms are too slow to learn efficiently in real time, and robots are expensive and fragile. This gave rise to the "learning in simulation" approach, which in turn raises the question of whether the results transfer to the real world. In this paper, we study real-world perception for active agents, propose the Gibson virtual environment, and showcase a set of perceptual tasks learned in it.

Detailed introduction

An ideal robotic agent needs both perceptual and physical capabilities: a drone that can inspect buildings autonomously, a robot that can quickly locate victims in a disaster area, a robot that can safely deliver packages, and so on. Beyond the application perspective, a close connection between visual perception and physical movement is argued for in many fields: evolutionary and computational biologists have hypothesized that combining perception and movement plays a key role in the development of complex behaviors and species; neuroscientists believe that developing perception and being active go hand in hand; roboticists likewise believe that the two functions should be closely coupled. All of this calls for perceptual models developed specifically with active agents in mind.

Generally, the agent we have in mind receives visual observations from the environment and takes a sequence of actions in response, which can substantially change the environment, the agent itself, or both. So how and where should such an agent be developed?

The "how" has been studied extensively, from classical control problems to more recent work on sensorimotor control, reinforcement learning, action prediction, imitation learning, and so on. These methods usually assume that an observation from the environment is given and then devise one or a series of actions to complete the task.

Another key question is where the sensor observations come from. Traditional computer vision datasets are passive and static. Learning in the physical world is possible but not ideal: learning speed is bounded by real time, large-scale parallelization is costly, and robots are fragile. This has led to the widespread adoption of "learning in simulation". The question that follows is how to generalize from simulation to the real world, and how to ensure that:

The semantic complexity of the simulated environment accurately reflects the real world;

The rendered visual observation is similar to the effect captured by the camera (realism).

To address these issues, we propose Gibson, a virtual environment for training and testing agents' perception of the real world.

Gibson composition

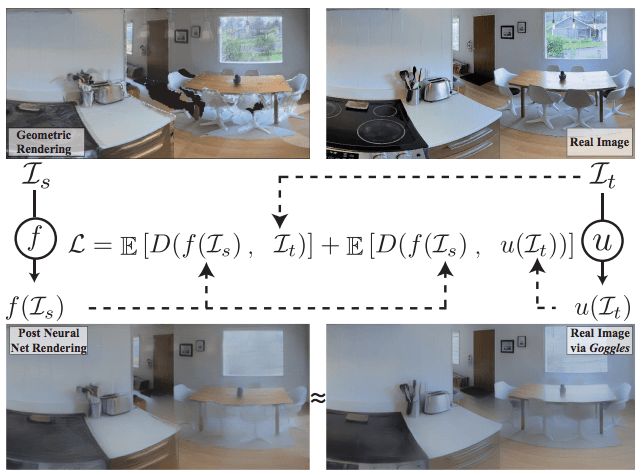

The main goal of Gibson is to help models trained in it transfer to the real world. This is done in two steps. First, the environment captures the semantic complexity of the real world by being built from scans of real scenes rather than from artificially designed ones. Second, a mechanism is embedded to close the gap between Gibson's renderings and what a real camera produces.

As a result, the agent cannot distinguish Gibson's renderings from photos taken by a camera, so the perceptual gap between the two is greatly reduced. This is achieved with a neural-network-based rendering approach: one network makes rendered images look more like real photos, while another network (Goggles) makes real images look more like the renderings. The two functions are trained to produce the same output, which bridges the two domains.
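The idea of training two functions to produce the same output can be sketched as a loss. This is a minimal sketch, not the paper's implementation: it assumes images are flat lists of pixel intensities, `f` (correction applied to renderings) and `u` (mapping applied to real photos) are hypothetical stand-in callables rather than real networks, and mean absolute difference is an assumed choice of distance.

```python
from typing import Callable, List, Sequence

Image = Sequence[float]  # flattened pixel intensities (assumption for this sketch)


def l1_distance(a: Image, b: Image) -> float:
    """Mean absolute per-pixel difference between two equally sized images."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def joint_space_loss(
    f: Callable[[Image], Image],
    u: Callable[[Image], Image],
    rendered: Image,
    real: Image,
) -> float:
    """Distance between the corrected rendering f(rendered) and the altered photo u(real).

    Driving this value toward zero makes the two functions agree on a common output.
    """
    return l1_distance(f(rendered), u(real))


# Toy stand-ins (hypothetical): f brightens the rendering, u darkens the photo.
def brighten(img: Image) -> List[float]:
    return [p + 0.05 for p in img]


def darken(img: Image) -> List[float]:
    return [p - 0.05 for p in img]


rendered_view = [0.2, 0.4]
real_photo = [0.3, 0.5]
loss_before = joint_space_loss(lambda i: i, lambda i: i, rendered_view, real_photo)
loss_after = joint_space_loss(brighten, darken, rendered_view, real_photo)
```

In this toy case `loss_after` is smaller than `loss_before` because the two stand-in functions meet halfway; in a real system both functions would be learned.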

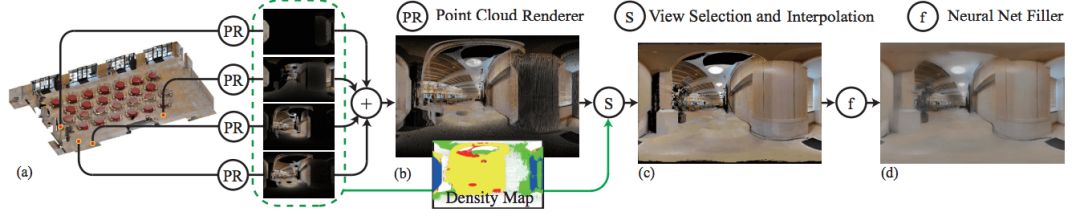

Gibson's architecture consists of a neural-network-based view synthesis module and a physics engine. The view synthesis pipeline is shown in the figure:

It consists of a geometric point cloud renderer and a neural network, which can correct artifacts and fill in uncovered areas.
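To make the geometric stage concrete, here is a minimal sketch of point cloud rendering with a depth buffer. It assumes a simple pinhole camera with the principal point at the image center and points already expressed in the camera frame; the function name and parameters are illustrative and not Gibson's API. Pixels that no point projects onto stay `None`, which corresponds to the uncovered areas a filling network would have to complete.

```python
import math
from typing import List, Optional, Sequence, Tuple

# (x, y, z, intensity) with coordinates in the camera frame (assumption for this sketch)
Point = Tuple[float, float, float, float]


def render_point_cloud(
    points: Sequence[Point], width: int, height: int, focal: float
) -> List[List[Optional[float]]]:
    """Project points through a pinhole camera, keeping the nearest point per pixel."""
    depth = [[math.inf] * width for _ in range(height)]
    image: List[List[Optional[float]]] = [[None] * width for _ in range(height)]
    cx, cy = width / 2.0, height / 2.0
    for x, y, z, value in points:
        if z <= 0:  # point lies behind the camera
            continue
        col = math.floor(focal * x / z + cx)
        row = math.floor(focal * y / z + cy)
        inside = 0 <= col < width and 0 <= row < height
        if inside and z < depth[row][col]:  # depth test: nearer points win
            depth[row][col] = z
            image[row][col] = value
    return image


cloud = [
    (0.0, 0.0, 2.0, 0.5),   # far point at the image center
    (0.0, 0.0, 1.0, 0.9),   # nearer point on the same pixel, occludes the first
    (1.0, 0.0, 1.0, 0.3),   # point to the right of the center
    (0.0, 0.0, -1.0, 0.7),  # behind the camera, skipped
]
image = render_point_cloud(cloud, width=4, height=4, focal=1.0)
holes = sum(pixel is None for row in image for pixel in row)
```

With sparse input most pixels remain holes, which illustrates why a purely geometric renderer needs a second stage to produce complete images.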

Both the 3D input and the geometric rendering are imperfect, so even with neural network correction the results are unlikely to be fully photorealistic, and a gap to real photos remains. We therefore treat rendering as the problem of constructing a common space in which rendered images and real images correspond to each other.

Experimental results

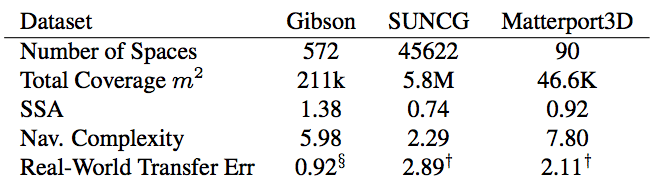

The dataset used by Gibson was collected with a variety of scanning devices, including NavVis, Matterport, and DotProduct, and covers a wide range of spaces, such as offices, garages, theaters, convenience stores, gyms, and hospitals. All spaces are fully reconstructed in 3D and post-processed. We benchmarked Gibson against existing synthetic datasets. The specific parameters are as follows:

SSA stands for specific surface area, a measure of how cluttered a model is. Next, we compared the rendering quality on sample views:

From top to bottom: images before neural network correction, images after neural network correction, real images seen through Goggles, and target images.

Transfer to the real environment

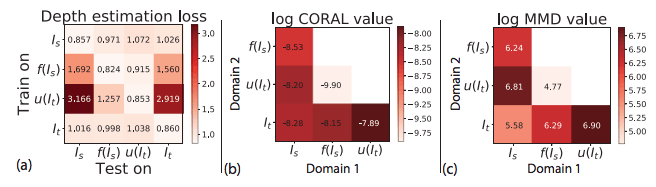

The 4×4 matrices in the figure show the evaluation of transfer from Gibson to real scenes: (a) shows the depth estimation error for all train-test combinations; (b) and (c) show the MMD and CORAL distribution distances.
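For readers unfamiliar with these two measures, the sketch below computes them on small sets of feature vectors. It is an illustration under stated assumptions, not the paper's evaluation code: MMD is the biased estimate of the squared distance under an RBF kernel (the kernel and its `gamma` are assumed choices), and CORAL is the squared Frobenius distance between the two covariance matrices, scaled by 1/(4d²).

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def rbf_kernel(a: Vector, b: Vector, gamma: float) -> float:
    """Gaussian (RBF) kernel between two vectors."""
    return math.exp(-gamma * sum((x - y) ** 2 for x, y in zip(a, b)))


def mmd_squared(xs: Sequence[Vector], ys: Sequence[Vector], gamma: float = 1.0) -> float:
    """Biased estimate of the squared maximum mean discrepancy between two samples."""

    def mean_kernel(ps: Sequence[Vector], qs: Sequence[Vector]) -> float:
        total = sum(rbf_kernel(p, q, gamma) for p in ps for q in qs)
        return total / (len(ps) * len(qs))

    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2.0 * mean_kernel(xs, ys)


def covariance(samples: Sequence[Vector]) -> List[List[float]]:
    """Unbiased sample covariance matrix (rows are samples, columns are features)."""
    n, d = len(samples), len(samples[0])
    means = [sum(s[j] for s in samples) / n for j in range(d)]
    return [
        [sum((s[i] - means[i]) * (s[j] - means[j]) for s in samples) / (n - 1)
         for j in range(d)]
        for i in range(d)
    ]


def coral_distance(xs: Sequence[Vector], ys: Sequence[Vector]) -> float:
    """Squared Frobenius distance between covariances, scaled by 1 / (4 d^2)."""
    d = len(xs[0])
    cov_x, cov_y = covariance(xs), covariance(ys)
    frob_sq = sum((cov_x[i][j] - cov_y[i][j]) ** 2 for i in range(d) for j in range(d))
    return frob_sq / (4.0 * d * d)


# Toy feature sets: the second is the first scaled by a factor of two.
rendered_features = [[0.0, 0.0], [1.0, 1.0], [2.0, 0.0]]
real_features = [[0.0, 0.0], [2.0, 2.0], [4.0, 0.0]]
mmd = mmd_squared(rendered_features, real_features)
coral = coral_distance(rendered_features, real_features)
```

Both values are zero when the two samples are identical and grow as the distributions drift apart, so lower values indicate a smaller gap between domains.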

Task resolution strategy

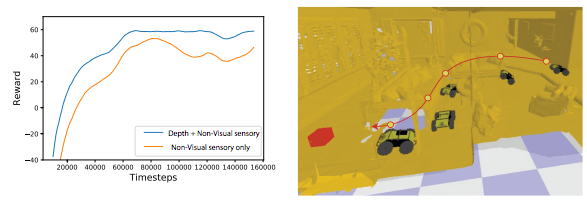

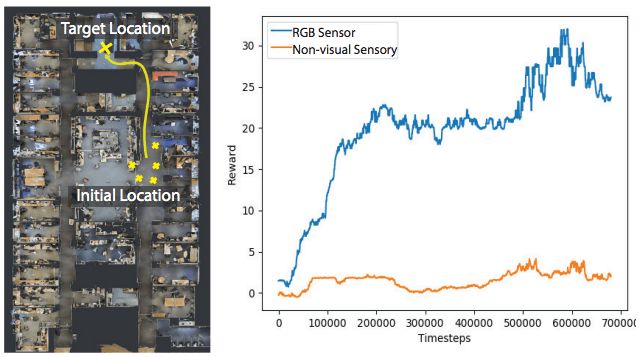

In addition, agents trained in Gibson can learn reward-driven policies for solving tasks such as:

Route planning and obstacle avoidance

Long-distance navigation
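As an illustration of what a reward for such navigation tasks might look like, here is a minimal sketch. The terms and weights are hypothetical and not taken from the paper: the agent is rewarded for reducing its distance to a target and penalized when it collides with an obstacle.

```python
import math
from typing import Sequence


def navigation_reward(
    prev_pos: Sequence[float],
    pos: Sequence[float],
    target: Sequence[float],
    collided: bool,
    progress_weight: float = 1.0,    # hypothetical weight
    collision_penalty: float = 0.5,  # hypothetical penalty
) -> float:
    """Reward progress toward the target and penalize collisions."""
    progress = math.dist(prev_pos, target) - math.dist(pos, target)
    reward = progress_weight * progress
    if collided:
        reward -= collision_penalty
    return reward


# One step of 1 unit straight toward a target 3 units away.
clean_step = navigation_reward((0.0, 0.0), (1.0, 0.0), (3.0, 0.0), collided=False)
bumped_step = navigation_reward((0.0, 0.0), (1.0, 0.0), (3.0, 0.0), collided=True)
```

A policy trained against a signal of this kind is pushed to approach the goal while avoiding obstacles; longer routes simply accumulate the per-step reward over more steps.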

Conclusion

Although the Gibson environment gives active agents a good perception of the real world, it still has some shortcomings. First, although agents can learn complex navigation and locomotion in Gibson, the environment does not currently include dynamic content and does not support manipulation. This could be addressed by combining it with synthetic objects. In addition, we did not model all material properties, and no physics simulator is currently ideal, which may lead to a physics gap between simulation and reality. Finally, transfer to the real world was evaluated mostly on static tasks; applying the trained models to real robots is left for future work.
