Lei Feng network press: The author, Ai Wei, is CTO of Yi Yi Technology and graduated from the University of Toronto with a degree in computer engineering. He studied under Professor Steve Mann, the "father of wearable computing," and has focused for many years on fundamental research in smart glasses. Recently, YIHUI announced that it is open-sourcing VMG-PROV for geeks. VMG-PROV is a pair of video see-through mediated reality glasses. Why hasn't it been officially launched? Why choose video see-through? The author answers these questions from his own experience building the product: "By open-sourcing VMG-PROV, we hope to expose all of the product's problems as early as possible." Beyond the product's own strengths and weaknesses, this article is a serious exploration of the technology behind it.

The following is the author's first-person account. Lei Feng network has edited it without changing the original meaning.

Today I wrote a short article to explain what exactly VMG-PROV (the so-called open source geek version) is. I hope friends who read it will understand the "toy" they are about to get their hands on. Of course, wealthy buyers are free to do as they please; I think VMG-PROV looks very handsome just sitting on a shelf at home.

| Open source in the blood

I remember the first time I went to Steve Mann's lab. I saw a bunch of eccentric engineers (including a shirtless, long-haired man) surrounded by piles of "broken" electronic devices, monitors, and assorted components. They were gathered in groups, working on something I couldn't make sense of, through interfaces that looked completely unfamiliar. Along with an inexplicable excitement, I felt embarrassed by my own ignorance.

"Hi Steve, what software are they using?" I asked curiously, though I felt it was a feeble question.

"Oh Arkin, I can't answer that if it's not the right question." The professor wasn't wearing shoes and had a soldering iron in his hand; he answered without even turning to face me. Perhaps he didn't realize I was frozen on the spot.

"You know, Arkin, we don't use any software in this Lab." The professor put down the soldering iron and looked over at me.

"Software means programs that you have to pay for. Paying for software that you can't hack or share is ridiculous." The professor suddenly became serious, and I finally understood why the programs on their screens looked so strange.

I nodded and said thoughtfully: "So everything here is open source? Like everything?"

The professor smiled and nodded. "Yes, everything."

During my time in the lab, I came across a wide variety of open source tools, and the professor had told the truth. Our operating system was Ubuntu rather than Windows or macOS; we edited images with GIMP instead of Photoshop; even Word and Excel were replaced by LibreOffice. More extreme still, as researchers we didn't even use MATLAB; we used something called Octave instead.

Steve once made an artwork that is now in the Austin Art Museum. The installation in the picture below is called "License To Sit". It is a chair that you must buy a license to use. Just like paid software, the chair reminds you to renew when the license is about to expire; if it does expire, steel spikes pop up and forcibly end the "sitting service" the chair provides. The piece is playful, and it makes us think about the absurd situations that arise when the rules of the digital world are applied to the physical world.

Looking at the chair and recalling my first conversation with the professor, I suddenly realized that a decision I had been weighing for a long time had its roots back then. Through those subtle influences, open source had already gotten into my blood.

| To the geeks

Recently, we decided to release VMG-PROV, an imperfect engineering prototype, to the market as open source hardware.

I'll admit that this decision is partly selfless and partly self-interested.

We have indeed solved some problems in smart glasses. By open-sourcing VMG-PROV, we hope that peers and ambitious geeks can take it apart, study it, and avoid solving the same problems all over again: the wheel already exists, so there is no need to reinvent it. In addition, the smart glasses we are building differ from conventional VR/AR and are slightly ahead of the field. To reach our ideal product as soon as possible, our small 20-person team needs outside help. By opening up VMG-PROV, we hope to expose all of the product's problems as early as possible. At the same time, geeks heading in the same direction can work together from the same starting line to perfect the product. Open source is surely the direction of future science and technology. A small but successful geek team should not keep thinking about how to make money from its imperfect "little secrets"; it should think about how to get everyone involved so that things get done sooner.

We hope to deliver VMG-PROV to the right geeks. This generation of the product is not consumer-grade, or even developer-grade; we call it the "geek version". We hope to sell it to geeks who have open source in their blood, want to explore digital vision, and have the skills to match. Having read this far, I think readers can already judge for themselves whether to buy VMG-PROV.

| Why choose video see-through?

VMG (including PROV, now in pre-sale, and MARK, now in R&D) is an open source head-mounted display and a tool for exploring mediated reality (and digital vision). We believe that virtual reality (VR) and augmented reality (AR) are both instances of digital vision. Therefore, VMG must first be able to support both VR and AR in a single head-mounted display, which calls for video see-through technology. As I mentioned in an earlier short article on smart glasses, moving from AR toward Mixed Reality or Mediated Reality requires crossing the video see-through threshold.

People often ask me: there are so many optical see-through solutions on the market, and everyone is doing optical see-through, so why did you choose video see-through? My answer is that I did not choose video see-through; video see-through chose me.

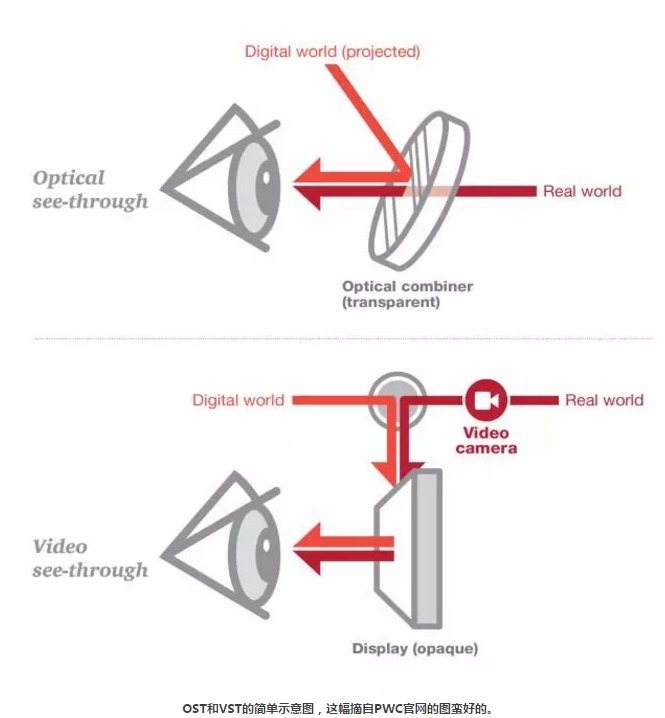

Before joining the lab, I had been studying FPGAs. Because of this, Steve asked me to use FPGAs to take on some GPU algorithms that could not run on the smart glasses. Once we had results, a senior student and I presented our research at SIGGRAPH. Chatting at that packed exhibition, he and I each settled on our future research directions. After returning to the lab, I focused mainly on video see-through and related software, while he began studying optical see-through. Video See-Through (VST) and Optical See-Through (OST), as used in HoloLens, each have their own advantages and disadvantages. Below, using AR applications as the example, I summarize how the two compare on the main issues. (The academic literature covers both very thoroughly; interested readers can look up the relevant papers.)

Comparison of Video See-Through (VST) and Optical See-Through (OST, as in HoloLens):

1. Occlusion and field of view (VST wins)

Optical see-through uses a specialized optical design to project the digital image onto a translucent display element, so occlusion is never perfect. Anyone who read the Magic Leap smart-glasses patents published at the beginning of the year knows they spent at least seven pages describing a complex optical design to mitigate this occlusion problem. OST optics are very complicated, and making them both compact and wide-angle is extremely difficult. Anyone who has tried HoloLens (a field of view of about 40 degrees) will know what I mean by a small field of view.

At the same time, because the design is complex and hard to manufacture, OST is expensive. And translucent is translucent: mitigation cannot fully solve the problem. Here VST beats OST. Because the real scene is captured by cameras and digitized before it is shown on the screen, occlusion is easy to achieve at low cost. In this respect, VST can use algorithms to "photoshop" the real world in real time, turning reality into your canvas.
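Because every real pixel in a VST pipeline is already in memory, occlusion reduces to a per-pixel depth comparison between the camera image and the rendered content. The sketch below assumes a depth map of the real scene is available (for example, estimated from the stereo cameras), which the article does not specify; `composite_with_occlusion` is a hypothetical helper, not part of the VMG-PROV code.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]


def composite_with_occlusion(
    real_rgb: List[Pixel],
    real_depth: List[float],
    virt_rgb: List[Pixel],
    virt_depth: List[Optional[float]],
) -> List[Pixel]:
    """Per-pixel z-test between the camera image and rendered content.

    Hypothetical helper. All buffers are flat lists with one entry per pixel.
    virt_depth is None where nothing virtual was rendered. Depths share one
    unit (assumed metres); smaller means closer to the viewer.
    """
    n = len(real_rgb)
    if not (len(real_depth) == len(virt_rgb) == len(virt_depth) == n):
        raise ValueError("all buffers must have the same number of pixels")
    out: List[Pixel] = []
    for r_px, r_z, v_px, v_z in zip(real_rgb, real_depth, virt_rgb, virt_depth):
        if v_z is not None and v_z < r_z:
            out.append(v_px)  # virtual surface is in front: draw it
        else:
            out.append(r_px)  # real surface is in front, or no virtual content here
    return out


# A virtual object 0.8 m away, seen against real surfaces at 1.0 m and 0.5 m.
real = [(90, 90, 90)] * 3
virt = [(255, 0, 0)] * 3
print(composite_with_occlusion(real, [1.0, 0.5, 2.0], virt, [0.8, 0.8, None]))
# prints [(255, 0, 0), (90, 90, 90), (90, 90, 90)]
```

An OST display cannot do the equivalent of the `else` branch in reverse: it can add light over the real scene but cannot fully block it, which is the occlusion problem described above.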

2. Latency and distortion of the real-world image (OST wins)

Because VST relies on cameras, and the computer must process both the real-world video and the digital content before display, its latency is much higher than OST's.

VST head-mounted display hardware is closer to VR, and the refresh delays of the camera and display are inherent. In an OST display, light from the outside world passes straight through the optics, so the real-world view has zero distortion and zero latency. Besides refresh delay, video see-through must also correct for the fact that the cameras' position and optics differ from those of the human eye, and this correction adds further inherent delay. We have spent a great deal of time reducing latency through hardware and software optimization, but it can never reach zero. On this point, OST beats VST.
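A rough way to see why VST latency matters: every stage between the camera and the display adds to the delay of the real-world image, and during a head turn the displayed world trails the true one by roughly angular speed times latency. The stage names and millisecond values below are hypothetical placeholders, not measurements of VMG-PROV.

```python
def total_latency_ms(stages_ms: dict) -> float:
    """Sum per-stage delays along the real-world video path (milliseconds)."""
    return sum(stages_ms.values())


def misregistration_deg(head_speed_deg_s: float, latency_ms: float) -> float:
    """Angle by which the displayed scene trails the true scene during a steady head turn."""
    return head_speed_deg_s * latency_ms / 1000.0


# Hypothetical VST stage budget (illustrative numbers only, not measured).
vst_stages = {"camera_frame": 17, "usb_transfer": 5, "pc_processing": 10, "display_scanout": 18}
vst_ms = total_latency_ms(vst_stages)
print(vst_ms, misregistration_deg(100.0, vst_ms))  # prints 50 5.0
```

In an OST display the real-world path has none of these stages, so its misregistration is zero; only the virtual overlay lags behind.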

3. The VAC problem (tie)

Both OST and VST suffer from VAC. When we wear a head-mounted display, the eyes' vergence (the distance at which the two lines of sight converge) and accommodation (the distance at which each eye focuses) can disagree; this conflict disrupts depth perception and causes dizziness.
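The conflict can be quantified in diopters (inverse metres): the eyes converge on the virtual object's rendered distance but must focus on the display optics' fixed focal plane. A minimal sketch; the 2 m focal distance and the 63 mm interpupillary distance are assumed values, not VMG specifications.

```python
import math


def vac_diopters(vergence_distance_m: float, focal_distance_m: float) -> float:
    """Vergence-accommodation mismatch in diopters (1/m)."""
    if vergence_distance_m <= 0 or focal_distance_m <= 0:
        raise ValueError("distances must be positive")
    return abs(1.0 / vergence_distance_m - 1.0 / focal_distance_m)


def vergence_angle_deg(distance_m: float, ipd_m: float = 0.063) -> float:
    """Angle between the two lines of sight when fixating at distance_m.

    The 63 mm interpupillary distance is an assumed typical value.
    """
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))


# Virtual object rendered at 0.5 m, display optics focused at an assumed 2 m.
print(vac_diopters(0.5, 2.0))  # prints 1.5
```

The mismatch vanishes only when content sits at the focal plane, which is why fixed-focus displays, OST and VST alike, share the problem.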

Interested readers can look up the Vergence-Accommodation Conflict.

(Lei Feng network note: For more on the VAC phenomenon, see the article "The VAC phenomenon that has long plagued virtual reality: is there really no solution?")

Magic Leap's big patent also describes many light-field techniques for mitigating this problem. Until they ship, OST and VST remain tied on this issue.

| Geeks, please read on

VMG-PROV is a video see-through exploration tool with limited performance. I hope the following description helps geeks understand the risks of buying PROV.

Latency has been greatly improved in the VMG-MARK architecture; I will give the details at the end of the article. The following figure is taken from the demo project that we are going to open-source. It can superimpose a digital model on a tabletop without a preset marker.

In this open source demo, VMG-PROV captures the real scene with a binocular (stereo) camera, processes it on the FPGA, and sends it to the connected PC over USB 3.0. After the software calibrates the real image, the visual SLAM algorithm runs and places the preset digital model in the correct position. The resulting frame is then sent over HDMI to the screens in the head-mounted display. This open source project contains:
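Once the stereo pair is calibrated and rectified, each matched point's depth follows from its horizontal disparity by triangulation, Z = f·B/d. This is the textbook pinhole-stereo relation rather than VMG-PROV's own code; the focal length, baseline, and principal point in the example are hypothetical.

```python
from typing import Tuple


def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth Z = f * B / d for a rectified stereo pair (pinhole model)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


def backproject(u: float, v: float, disparity_px: float, focal_px: float,
                cx: float, cy: float, baseline_m: float) -> Tuple[float, float, float]:
    """3D point (X, Y, Z) in the left camera frame from a left-image pixel and its disparity."""
    z = depth_from_disparity(disparity_px, focal_px, baseline_m)
    return ((u - cx) * z / focal_px, (v - cy) * z / focal_px, z)


# Hypothetical rig: f = 700 px, 6 cm baseline, principal point (640, 360).
print(backproject(675.0, 360.0, 21.0, 700.0, 640.0, 360.0, 0.06))
# approximately (0.1, 0.0, 2.0)
```

Because depth is inversely proportional to disparity, a one-pixel matching error costs far more accuracy on distant points than on near ones, which is one reason calibration quality matters so much for stable overlays.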

1. Hardware details and schematics of VMG-PROV

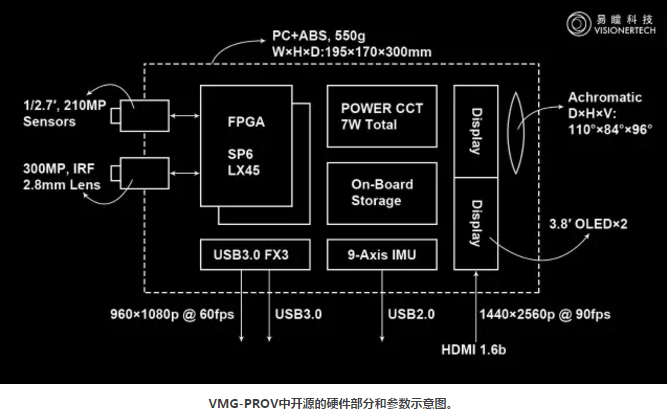

As shown in the figure, the VMG-PROV hardware parameters are labeled and listed in the table below. The video captured by the cameras is processed by the FPGA and passed to the connected PC; finally, SLAM overlays the digital model for the eye. For AR, then, VMG-PROV requires the PC to handle a large video throughput, so a high-end desktop is recommended.
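To give a sense of the "large video throughput", the raw bandwidth of an uncompressed stereo stream can be estimated directly. The resolution, pixel format, and frame rate below are assumptions, since the article's parameter table appears only in the figure. USB 3.0 signals at 5 Gbit/s with 8b/10b encoding, which leaves about 4 Gbit/s before protocol overhead.

```python
def raw_stream_gbps(width: int, height: int, bytes_per_pixel: int, fps: int, cameras: int) -> float:
    """Uncompressed video bandwidth in Gbit/s (1 Gbit = 1e9 bits)."""
    bits_per_second = width * height * bytes_per_pixel * 8 * fps * cameras
    return bits_per_second / 1e9


# USB 3.0 (5 Gbit/s line rate, 8b/10b encoding): payload ceiling before protocol overhead.
USB3_PAYLOAD_GBPS = 5.0 * 8 / 10

# Assumed format: two 1080p cameras, YUV 4:2:2 (2 bytes/pixel), 60 fps.
need = raw_stream_gbps(1920, 1080, 2, 60, 2)
print(f"{need:.2f} of {USB3_PAYLOAD_GBPS:.1f} Gbit/s")  # prints 3.98 of 4.0 Gbit/s
```

Under these assumptions the stream would use nearly the whole link, and the PC must still calibrate, run SLAM on, and render over every frame, which is why a powerful desktop helps.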

As an MR headset, VMG-PROV's specifications only reach entry-level VR. I don't think this generation is enough for building amazing MR applications. That is why I hope everyone will treat this product as a tool for learning and exploration, and also give us plenty of feedback for improvements. To support this, we will provide the VMG-PROV hardware schematics.

2. Hardware description language for processing images on FPGA

The key advantage of VST over OST is that the real image can be modified; put simply, it can be "photoshopped" in real time by algorithms.

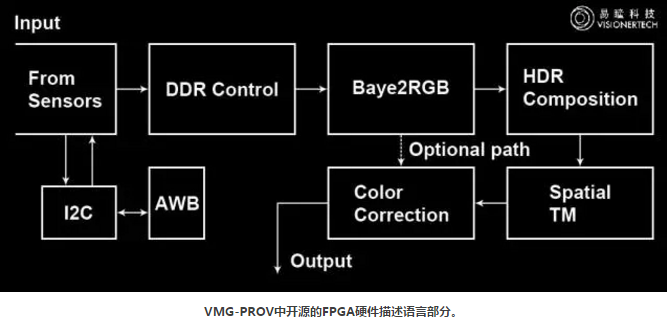

If algorithms validated in software can be implemented or accelerated on an FPGA, latency can be reduced. Our open source project includes an MR demo, Real-Time HDR Composition & Tonal Mapping, which is implemented entirely in FPGA logic.

As shown in the figure below, the FPGA drives the sensor to switch exposures at high speed, and a compositing circuit then selects the best-exposed parts of each frame, from dark to bright.
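The merge step can be modelled in software as a weighted average of the exposures, where each pixel's weight favours mid-tones over crushed shadows and clipped highlights, followed by a global tone curve. This is a generic sketch (a weighted radiance estimate with a triangular "hat" weight, plus a Reinhard-style L/(1+L) curve) that blends exposures softly instead of hard-selecting one per pixel; it is not the logic in the authors' FPGA, and it assumes a linear sensor response with pixel values in [0, 1].

```python
from typing import List, Sequence, Tuple


def hat_weight(z: float) -> float:
    """Favour mid-tones: 1.0 at z = 0.5, 0.0 for fully black or fully clipped pixels."""
    return 1.0 - abs(2.0 * z - 1.0)


def merge_exposures(exposures: Sequence[Tuple[Sequence[float], float]]) -> List[float]:
    """Estimate relative scene radiance per pixel from bracketed exposures.

    exposures: (pixels, exposure_time_s) pairs; pixels are linear values in [0, 1].
    Assumes a linear sensor response (no gamma) and identical pixel counts.
    """
    shortest_pixels, shortest_t = min(exposures, key=lambda e: e[1])
    radiance = []
    for i in range(len(shortest_pixels)):
        num = den = 0.0
        for pixels, t in exposures:
            w = hat_weight(pixels[i])
            num += w * pixels[i] / t  # this exposure's own radiance estimate, weighted
            den += w
        if den > 0:
            radiance.append(num / den)
        else:
            # Every exposure is black or clipped: fall back to the shortest one.
            radiance.append(shortest_pixels[i] / shortest_t)
    return radiance


def tone_map(radiance: Sequence[float]) -> List[float]:
    """Global Reinhard-style curve lum / (1 + lum), compressing [0, inf) into [0, 1)."""
    return [lum / (1.0 + lum) for lum in radiance]


# One pixel captured at 10 ms and 20 ms; both exposures agree on radiance 25.
hdr = merge_exposures([([0.25], 0.01), ([0.5], 0.02)])
print(tone_map(hdr))  # approximately [0.9615]
```

In hardware, the division by exposure time and the normalization would typically become fixed-point multiplies and lookup tables, but the per-pixel data flow is the same.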

The FPGA is a magical chip, a "Transformer" among chips. Engineers who have worked with FPGAs know that FPGA development is not for the faint of heart.

The figure below shows part of the hardware description language code in the FPGA design we will open-source. Raw data from the sensor is fully processed by the ISP on the FPGA, and the composited HDR image is then sent to the PC over USB 3.0. I believe the open source Verilog HDL for this project can attract brave experts to join chip-level MR exploration, combining FPGA processing with the needs of the PC side to further reduce VST latency.

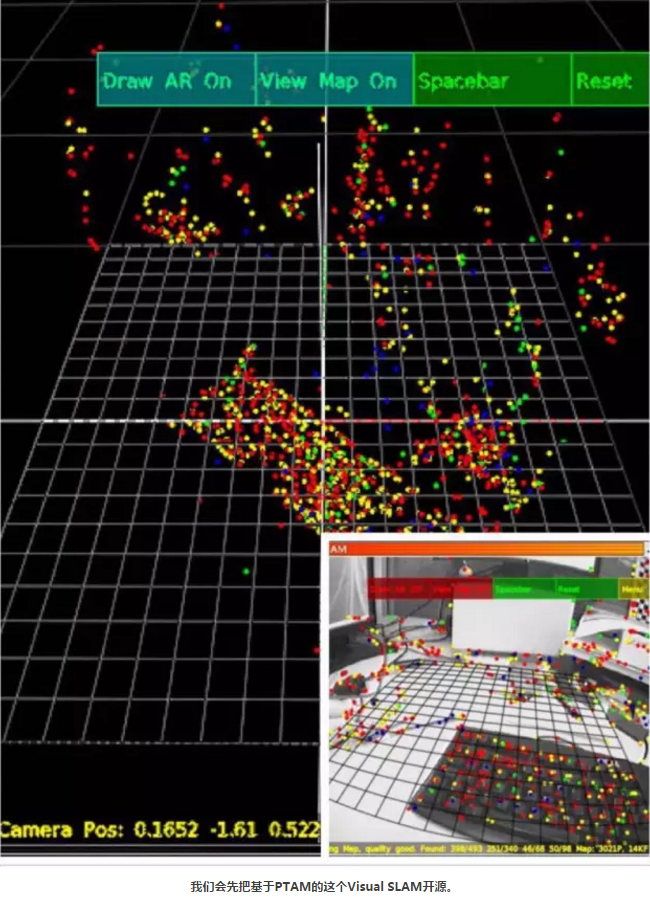

3. Stereo SLAM source code based on PTAM

The SLAM used in VMG-PROV runs on the binocular RGB camera and is a modified version of the well-known PTAM. This project superimposes the Miku from the video above into our glasses. After PTAM, we will also fuse sensor data and try other methods (such as ORB-SLAM or LSD-SLAM) to achieve more stable overlays. I haven't drawn a diagram for the SLAM part. Broadly, the open source content includes stereo camera calibration and rectification, FAST feature extraction, RANSAC and bundle adjustment, and the Miku model. For those who use Unity3D, the project also includes a way to import this SLAM. When reading the source code, you can consult PTAM's documentation to modify it.
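Of the components listed, the FAST feature test is compact enough to sketch. A pixel is a corner if at least n contiguous pixels on a 16-pixel circle of radius 3 are all brighter than the centre plus a threshold, or all darker than the centre minus it. This pure-Python version is for illustration only (real systems use heavily optimized implementations), and the threshold and n used here are assumed values, not the ones in the VMG-PROV code.

```python
from typing import List, Sequence

# Bresenham circle of radius 3: 16 (dx, dy) offsets in order around the circle.
CIRCLE = [(0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
          (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3)]


def is_fast_corner(img: Sequence[Sequence[int]], x: int, y: int,
                   threshold: int, n: int = 9) -> bool:
    """FAST segment test at (x, y); img is a list of rows of grey levels.

    The caller must keep (x, y) at least 3 pixels away from the image border.
    """
    p = img[y][x]
    states: List[int] = []
    for dx, dy in CIRCLE:
        v = img[y + dy][x + dx]
        if v > p + threshold:
            states.append(1)    # clearly brighter than the centre
        elif v < p - threshold:
            states.append(-1)   # clearly darker than the centre
        else:
            states.append(0)    # similar to the centre
    # Walk the circle twice so runs that wrap past the last offset are counted.
    for target in (1, -1):
        run = 0
        for s in states + states:
            run = run + 1 if s == target else 0
            if run >= n:
                return True
    return False


spot = [[0] * 7 for _ in range(7)]
spot[3][3] = 100                       # isolated bright pixel on a dark background
flat = [[50] * 7 for _ in range(7)]    # uniform patch
print(is_fast_corner(spot, 3, 3, 20), is_fast_corner(flat, 3, 3, 20))  # prints True False
```

The segment test needs only comparisons, no gradients, which is what makes FAST cheap enough for real-time tracking on every frame.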

| Ordinary developers, please wait

By now, I think everyone can judge whether they need to buy this "geek version". Since VMG-PROV has not yet reached the application level, developers building VMG applications should wait for VMG-MARK (the developer version). At the current pace, our R&D team has solved most of VMG-MARK's technical problems. We call VMG-MARK's hardware and software architecture VLLV (Very Low Latency Video See-Through); it will significantly mitigate the latency problem of video see-through (mitigate, not solve: the true solution would be zero latency). Therefore, developers who want to ship real applications are encouraged to use MARK rather than the VMG-PROV geek version.

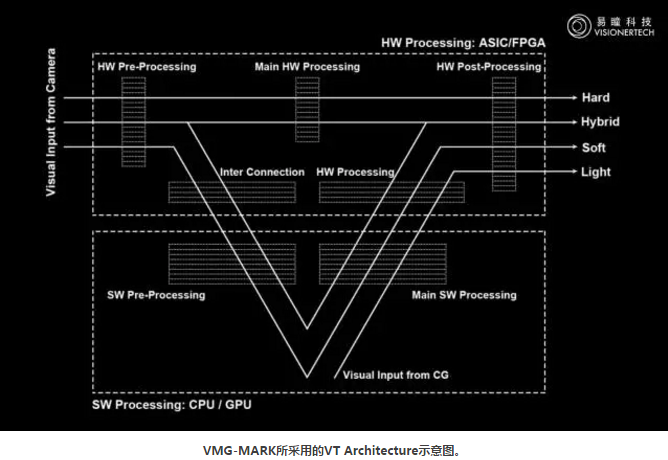

The following figure shows the architecture of VMG-MARK. The initial goal was to reduce the computation the PC spends on video throughput: the high-definition, high-frame-rate real-world video stream goes directly from the sensor to the screen without passing through the computer. The design later evolved into four modes.

We call this the VT Architecture. Its four operating modes are:

1. Hard mode

The real-world video signal is processed only by the FPGA and ASIC and then sent straight to the screen. This path has the lowest latency but is the hardest to develop for. Writing FPGA code is not so much hard as it is daunting; it takes courage.

2. Hybrid mode

This path is best suited to developing AR applications.

As shown in the figure, the FPGA in the headset sends video to the connected PC as needed. The digital model, together with its pose information, is then composited into the original high-definition image by the FPGA. This significantly reduces the latency of the real-world image while saving most of the computing resources originally required, which lowers the PC hardware requirements.
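The Hybrid-mode merge on the FPGA amounts to alpha compositing the PC-rendered layer over the full-resolution camera frame. The sketch below uses the standard Porter-Duff "over" operator with straight (non-premultiplied) alpha; the RGBA layer format and the helper names are assumptions, since the article does not describe what the PC actually sends back.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]


def alpha_over(camera_px: RGB, overlay_px: RGB, alpha: float) -> RGB:
    """Porter-Duff 'over' for one pixel with straight (non-premultiplied) alpha in [0, 1]."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    return tuple(round(alpha * o + (1.0 - alpha) * c) for o, c in zip(overlay_px, camera_px))


def composite_frame(camera: Sequence[RGB], overlay: Sequence[RGB],
                    alpha: Sequence[float]) -> List[RGB]:
    """Blend a rendered layer over the camera frame; alpha 0 leaves the camera pixel untouched."""
    return [alpha_over(c, o, a) for c, o, a in zip(camera, overlay, alpha)]


frame = composite_frame([(100, 100, 100)] * 3, [(200, 0, 0)] * 3, [0.0, 0.5, 1.0])
print(frame)  # prints [(100, 100, 100), (150, 50, 50), (200, 0, 0)]
```

In this arrangement the camera frame being blended is the FPGA's own copy, so it does not need a round trip through the PC; only the rendered layer and its pose-dependent content come from the computer.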

3. Soft mode

Attentive readers will have noticed that this is the path VMG-PROV uses. It is the most flexible path but has the highest latency: the video stream passes through the FPGA/ASIC, then goes to the computer, and is finally displayed on the screen. Soft mode is suited to early validation and experimentation. Projects written in Soft mode should then be optimized for the application's needs and moved to one of the other modes.

4. Light mode

Light mode is easy to understand: it is a VR headset with visual SLAM. Although the front cameras' images are not shown on the display, the cameras continuously track the headset's movement, including translation. This differs from Lighthouse's outside-in tracking. The improved SLAM we develop later will also be open source; please stay tuned.

That's about all for now. As a small team, we want to be the geeks we aspire to be. I believe there are many people out there who share our spirit of exploration, who have both the passion and the ability, and who will support us. We hope to share our results with such people. That is also the most important reason we plan to open-source.
